Controlling robots could become much easier and more intuitive with new technology that reads a controller's brainwaves and responds to hand gestures, not just programmed commands.

Researchers at MIT are controlling machines using only thought and gestures, in an effort to make human-robot communication much simpler. Scientists at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) are working on a system that can instantly correct robots and guide them with just thoughts and possibly a wave of a finger.

Until now, robots have always been trained using explicit commands and programming, according to a statement issued by the research team. Even voice-controlled robots and AI systems need specific coding to give them language skills. Both methods are time- and resource-consuming, and neither is very natural to use, the researchers say.

The team is currently improving on its past work, a system that focused on simple binary-choice activities. The current research expands that scope to "multiple-choice" tasks, opening up new possibilities for how human workers could manage a whole team of robots.

"We'd like to move away from a world where people have to adapt to the constraints of machines," says CSAIL director Daniela Rus, who supervised the work.

"Approaches like this show that it's very much possible to develop robotic systems that are a more natural and intuitive extension of us."

The system monitors brain activity and can detect in real time when the controller notices an error as a robot carries out its task. It also measures muscle activity, so the person can make hand gestures to scroll through options and select one for the machine to carry out.
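The interaction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the CSAIL team's implementation: the function name `choose_option`, the option labels, and the "left"/"right" gesture labels are all hypothetical, and the boolean `error_detected` simply stands in for the real-time reading of brain activity, which is not modeled here.

```python
from typing import Iterable

# Hypothetical labels for a three-way "multiple-choice" task.
OPTIONS = ["option A", "option B", "option C"]


def choose_option(initial: int, error_detected: bool, gestures: Iterable[str]) -> int:
    """Return the index of the option the robot should carry out.

    initial: index of the robot's own first choice.
    error_detected: stand-in for the brain signal that the person noticed a mistake.
    gestures: stand-in for recognized hand gestures, each "left" or "right".
    """
    if not error_detected:
        # No error signal from the observer: keep the robot's own choice.
        return initial

    index = initial
    for gesture in gestures:
        if gesture == "left":
            # Scroll one option back, stopping at the first option.
            index = max(index - 1, 0)
        elif gesture == "right":
            # Scroll one option forward, stopping at the last option.
            index = min(index + 1, len(OPTIONS) - 1)
        # Any other label is ignored in this sketch.
    return index


print(OPTIONS[choose_option(0, False, [])])
print(OPTIONS[choose_option(0, True, ["right", "right"])])
```

In this sketch the robot's first pick stands unless the observer's error signal is present. In that case, two "right" gestures move the selection from the first option to the third.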

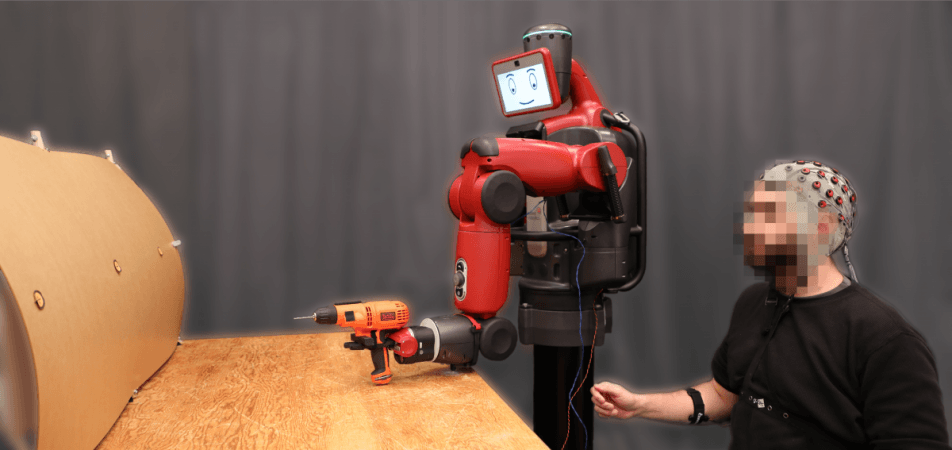

The researchers demonstrated the system with a robot that picks up a power drill and moves it toward one of three possible targets to drill. Even people who had never used the system before were able to operate it easily. This suggests the system could be integrated into real-world settings without much training.

"This work combining EEG and EMG feedback enables natural human-robot interactions for a broader set of applications than we've been able to do before using only EEG feedback," says Rus. "By including muscle feedback, we can use gestures to command the robot spatially, with much more nuance and specificity."

To create this technology, the team used electroencephalography (EEG) to read brain activity and electromyography (EMG) to read muscle activity, simply by placing a few electrodes on the controller's scalp and forearm. "By looking at both muscle and brain signals, we can start to pick up on a person's natural gestures along with their snap decisions about whether something is going wrong," says Joseph DelPreto, one of the lead authors of the paper.

"This helps make communicating with a robot more like communicating with another person." He said that under this system the machines adapt to the user, rather than the user adapting to the controls, which makes the exchange feel much more like human interaction.