Google Pixel phones are great devices, and they come bundled with a plethora of features. At Google I/O 2017, the web search titan introduced Google Lens – a visual search feature that uses the phone's camera to tell you what you're looking at. Now it's finally time for Pixel owners to get a taste of it.

There are two ways to access Google Lens on Pixel phones – from the Photos app and through Google Assistant. In an official blog post dated November 21, Google said that all Pixel smartphones, including the Pixel, Pixel XL, Pixel 2 and Pixel 2 XL, will get Google Lens in the Assistant "over the coming weeks."

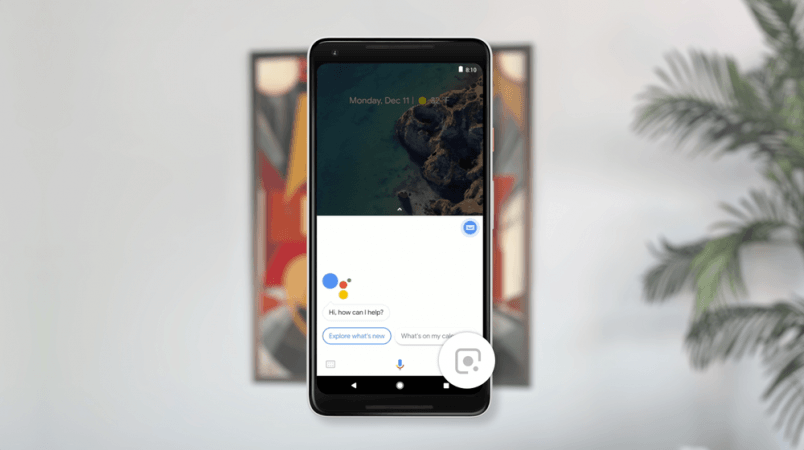

"Google Lens in the Assistant will be rolling out to all Pixel phones set to English in the U.S., U.K., Australia, Canada, India and Singapore over the coming weeks. Once you get the update, go to your Google Assistant on your phone and tap the Google Lens icon in the bottom right corner," Ibrahim Badr, Google Assistant's associate product manager, wrote in the company's blog.

Google Lens uses machine learning and the company's advancements in computer vision to offer quick help with what users see in real time. Be it a painting hanging on the wall or a monument, Google is stepping in to help you make sense of the world around you.

We'll be reviewing Google Lens when it hits our Pixel 2 XL, but here are a few things it can help you with.

Google Lens can:

Save information from a business card just by pointing the phone's camera at it

Follow URLs

Call phone numbers

Navigate to addresses

Recognise landmarks and learn about their history

Learn about a movie just by pointing the camera at the poster

Get the ratings and synopsis of a book (literally an example of judging a book by its cover)

Add an event for a new movie's release

Look up products by barcode

Scan QR codes & more.

So keep an eye out for that update notification on your Pixel smartphone to start using Lens. We know we will be.