Over the years, Facebook, the world's leading social network by monthly unique visitors, has changed the way people communicate. It allows people to stay in touch with friends, relatives and acquaintances wherever they are in the world, and it has even reunited lost family members and friends. Of late, however, the social media giant has seen a sharp rise in people using its newly introduced Live feature to express their distress through self-harm and suicide.

In a recent study, Facebook said that a suicide is reported every 40 seconds around the world, and that suicide is the second leading cause of death among 15- to 29-year-olds.

To curb this disturbing trend, Facebook has developed an artificial intelligence (AI)-based tool for its site, with the help of psychiatrists from leading mental health organisations such as Save.org, the National Suicide Prevention Lifeline, Forefront and Crisis Text Line, and with input from people who have personal experience of thinking about or attempting suicide. Facebook believes the tool can recognise subtle signs that a person may be suicidal and help prevent them from harming themselves.

Here's how Facebook's AI-based suicide prevention tool works:

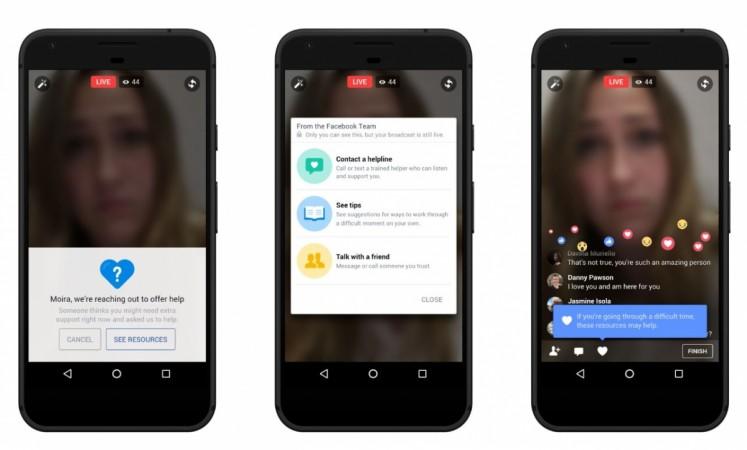

The person sharing the live video will be able to see a set of resources on their screen. They can choose to reach out to a friend, contact a helpline or see tips.

People can also connect with Facebook's crisis support partners over Messenger. They will have the option to message someone in real time, either directly from the partner organisation's page or through the suicide prevention tool.

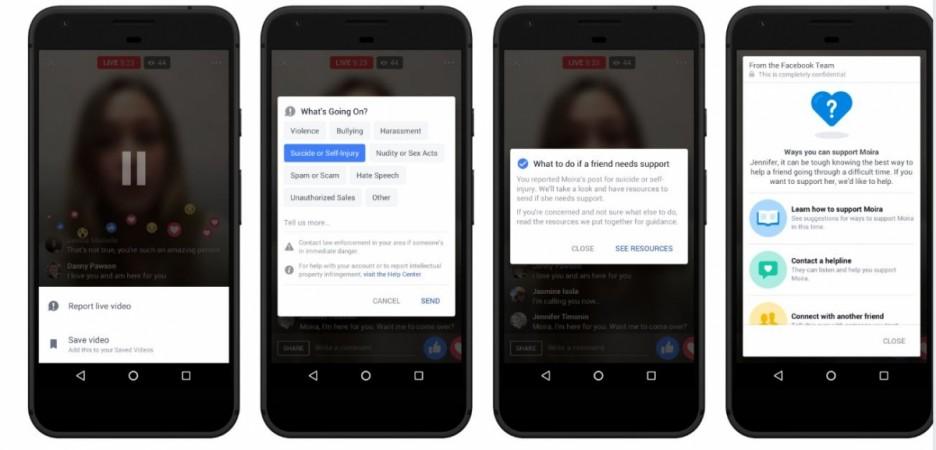

Viewers watching the Live video also have options. If they believe the person in the feed is trying to harm themselves, they can reach out and talk to that person in real time, and also report the video so that Facebook's partner organisations can counsel the person and help them recover from depression.

Facebook has set up a dedicated team that works around the clock to review incoming reports. With the help of artificial intelligence, the team can prioritise the most serious reports, such as those involving suicide, escalate them, and reach out to the person concerned or their loved ones.

For now, the tool is being tested in the United States. Facebook will continue to work closely with suicide prevention experts to explore other ways the technology can provide support, and to extend it to all regions.

After the Safety Check feature (for natural disasters), Facebook's suicide prevention system is another noble tool, one that will certainly help save precious lives.