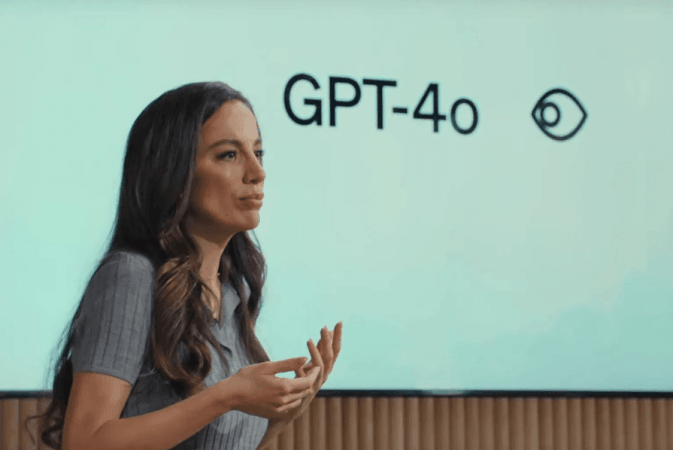

OpenAI's CTO Mira Murati presented the company's heavily anticipated Spring Update on Monday, May 13, 2024. The update introduced GPT-4o and made more tools available to free ChatGPT users.

GPT-4o ("o" for "omni") is the company's new flagship model, which can "reason across audio, vision, and text in real time." Its release could prove ground-breaking for bringing human-like sensory capabilities into AI technology.

The rollout, which brings a host of enhanced features and capabilities including a desktop application, is already underway and will expand in phases over the coming weeks and months.

Some Exciting and Useful Features

The OpenAI team led by Murati has released a series of videos (including a blooper reel) demonstrating GPT-4o's features, which could have a wide range of uses, including in day-to-day activities. Here are some of them:

Voice & Audio: On one hand, the model can translate conversations between languages in real time, which will benefit human-to-human interaction in a globalised world. ChatGPT also now supports more than 50 languages across sign-up, login and more. On the other hand, the model is designed to hold emotive conversations and narrate in expressive, human-like tones, taking human-AI interaction to another level.

The new model's response times approach those of humans in conversation: it can respond "to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds."

Vision: Using its vision capabilities, GPT-4o can gauge human emotions from facial expressions, making human-AI conversations more interactive. It can also work with code, graphics and images, which is likely to be highly useful across many sectors. In the demonstrations, the model describes and explains these visual inputs in simple terms and offers interpretations and analysis that take the situational context into account.

Text: On the text side, the developments have largely been performance improvements. There is a "significant improvement on text in non-English languages, while also being much faster and 50% cheaper" in the API (application programming interface, the means by which two software components communicate with each other). The model is designed to "generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user's writing style".

The following videos from OpenAI give a fair idea of GPT-4o's capabilities:

Two GPT-4os interacting and singing pic.twitter.com/u9VuZoroxm

— OpenAI (@OpenAI) May 13, 2024

Realtime translation with GPT-4o pic.twitter.com/J1BsrxwYdE

— OpenAI (@OpenAI) May 13, 2024

Happy birthday with GPT-4o pic.twitter.com/OuEkfQsap9

— OpenAI (@OpenAI) May 13, 2024

Dad jokes with GPT-4o pic.twitter.com/8w1coXBRGH

— OpenAI (@OpenAI) May 13, 2024

Sarcasm with GPT-4o pic.twitter.com/APrYJMvBFF

— OpenAI (@OpenAI) May 13, 2024

Math problems with GPT-4o and @khanacademy pic.twitter.com/RfKaYx5pTJ

— OpenAI (@OpenAI) May 13, 2024

Interview prep with GPT-4o pic.twitter.com/st3LjUmywa

— OpenAI (@OpenAI) May 13, 2024

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: https://t.co/MYHZB79UqN

— OpenAI (@OpenAI) May 13, 2024

Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks. pic.twitter.com/uuthKZyzYx

Live demo of GPT-4o realtime conversational speech pic.twitter.com/FON78LxAPL

— OpenAI (@OpenAI) May 13, 2024

Future and Expansion

GPT-4o expands the prospects for AI-to-AI interaction between devices. Given the relevant prompts, the model is shown holding conversations with another GPT-4o-enabled device using its voice, vision and text capabilities.

The new release also opens the way to more personalised experiences through "Memory", a feature that lets ChatGPT retain details from previous conversations to make "future chats more helpful", while keeping users in control of what is remembered.

In a step towards transparency, OpenAI has acknowledged that certain features, such as generated voice output, pose risks. These concerns call for safety and risk-mitigation measures, which the company says it is actively pursuing.

Overall, the developments introduced with GPT-4o, particularly the expanded free-tier access, look set to push human-AI interaction to new limits while further spurring innovation and competition among the Big Tech giants.