![Representational image. AI-based suicide detection system goes live on Facebook in multiple regions: here's how it works. In picture: The Facebook logo is displayed on its website in an illustration photo taken in Bordeaux, France, February 1, 2017.](https://data1.ibtimes.co.in/en/full/641335/facebook-logo-displayed-their-website.jpg?h=450&l=50&t=40)

In a bid to improve its user experience, Facebook rolled out the Facebook Live feature in mid-2016, allowing users to stream live video online. It was a welcome initiative in that it acted as a personalised, interactive communication platform.

But it took an ugly turn when people started live-streaming suicides, self-inflicted injuries and, at times, attacks on others, while onlookers watched helplessly.

To put a stop to the disturbing trend, Facebook announced in March this year an artificial intelligence (AI)-based suicide-prevention tool for the US region.

It was created with input from psychiatrists at leading mental health organisations such as Save.org, National Suicide Prevention Lifeline, Forefront and Crisis Text Line, and from people who have personal experience of thinking about or attempting suicide.

Now, the social media giant is finally rolling out the service on Facebook in several regions across the globe.

"We are starting to roll out artificial intelligence outside the US to help identify when someone might be expressing thoughts of suicide, including on Facebook Live. This will eventually be available worldwide, except the EU," Guy Rosen, VP of Product Management, said in a statement.

Here's how it works:

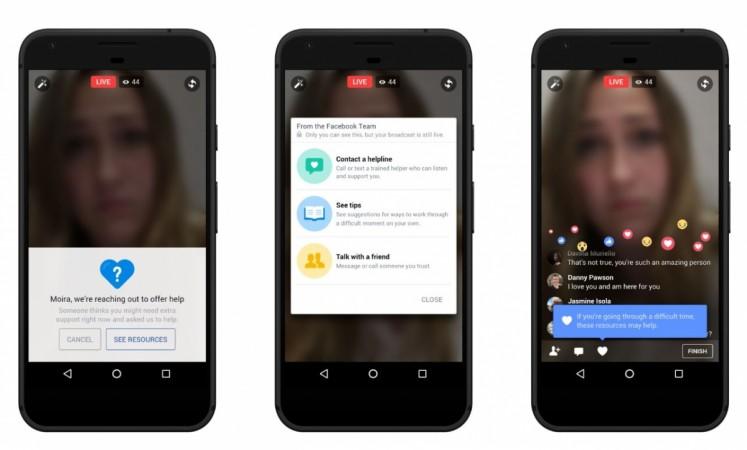

A suicidal person sharing a live video will be able to see a set of resources on their screen. They can choose to reach out to a friend, contact a helpline or see tips.

People can also connect with Facebook's crisis support partners over Messenger. They will be able to see the option to message someone in real time, directly from the page or through the suicide prevention tool.

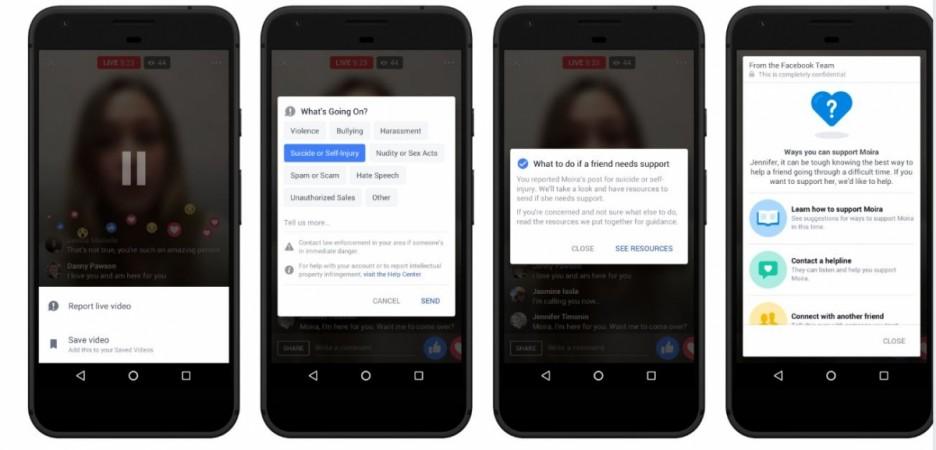

Another option is for viewers watching the Live video. If they believe the person in the video is trying to self-harm, they can intervene and talk to that person in real time, and also report the video to Facebook's partner organisations to help the person recover from depression.

Facebook has set up a dedicated 24x7 team (in select regions) to review incoming reports. With the help of artificial intelligence, the team can prioritise serious reports such as those involving suicide, escalate the issue, and reach out to the person concerned or their loved ones.

Facebook has said it will continue working closely with suicide prevention experts to understand other ways to use this technology to help provide support faster.

The new feature has the potential to save lives, but round-the-clock monitoring of users flagged by the suicide-watch tool raises questions about the right to privacy. This is why Facebook's AI-based tool is facing resistance in the European Union.

To allay privacy concerns, Alex Stamos, chief security officer of Facebook, has said the company has no ill intent and will use AI and data for good purposes.

"The creepy/scary/malicious use of AI will be a risk forever, which is why it's important to set good norms today around weighing data use versus utility and be thoughtful about bias creeping in. Also, Guy Rosen and team are amazing, great opportunity for ML engs to have impact [sic]," Stamos said in reply to a user on Twitter.

— Alex Stamos (@alexstamos) November 27, 2017
